Optimizing Student Assessment: Bridging the Gap Between Traditional and Online Teaching

EdTech

Web app

Complex system

B2B

User Research & Pain Points

Uncovering online teaching challenges

Many online teaching platforms assess only final submissions, leaving educators without insight into students’ learning process or the creative decisions made along the way. This is particularly problematic in subjects where technical execution and creative choices matter as much as the end result.

Competitive Analysis

Exploring existing online teaching assessments

Comparing four major competitors, I found that most now use AI to streamline course setup, and some also use it for basic quiz creation.

Ideation & Use Case

Integrating AI-powered feedback with instructor refinement

Step 1

AI-Assisted Review

The platform automatically analyzes each submission for elements such as composition, exposure, and adherence to specific technical guidelines. This process can be iterative: students receive immediate technical feedback and can refine their submission before presenting it to the instructor.
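As a rough illustration of this step, the sketch below checks a single technical guideline (exposure) and records each attempt so a student can iterate before instructor review. It is a minimal sketch under stated assumptions, not the platform's implementation: the names `Guideline`, `review_exposure`, and `Submission`, as well as every threshold value, are hypothetical, and composition analysis is left out entirely.

```python
from dataclasses import dataclass, field


@dataclass
class Guideline:
    """Hypothetical technical guideline an instructor might configure."""
    max_clipped_fraction: float = 0.05  # share of pixels allowed at pure black or white
    min_mean_luminance: int = 60        # below this the image is flagged as underexposed
    max_mean_luminance: int = 195       # above this the image is flagged as overexposed


def review_exposure(luminance: list[int], guideline: Guideline) -> list[str]:
    """Return technical feedback messages for 8-bit luminance values (0-255)."""
    if not luminance:
        return ["Image contains no pixel data."]
    feedback = []
    mean = sum(luminance) / len(luminance)
    # Fraction of pixels that sit at either extreme of the 8-bit range.
    clipped = sum(1 for v in luminance if v in (0, 255)) / len(luminance)
    if mean < guideline.min_mean_luminance:
        feedback.append("Image looks underexposed.")
    elif mean > guideline.max_mean_luminance:
        feedback.append("Image looks overexposed.")
    if clipped > guideline.max_clipped_fraction:
        feedback.append("Too many clipped shadows or highlights.")
    return feedback


@dataclass
class Submission:
    """Keeps the feedback from every attempt so earlier ones stay visible."""
    attempts: list[list[str]] = field(default_factory=list)

    def submit(self, luminance: list[int], guideline: Guideline) -> bool:
        """Record one attempt; True means it is ready for instructor review."""
        feedback = review_exposure(luminance, guideline)
        self.attempts.append(feedback)
        return not feedback
```

In this sketch a student would call `submit` repeatedly, reading the returned feedback after each attempt, and the work would move on to the instructor only once an attempt comes back with no technical issues.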

Step 2

Instructor Feedback

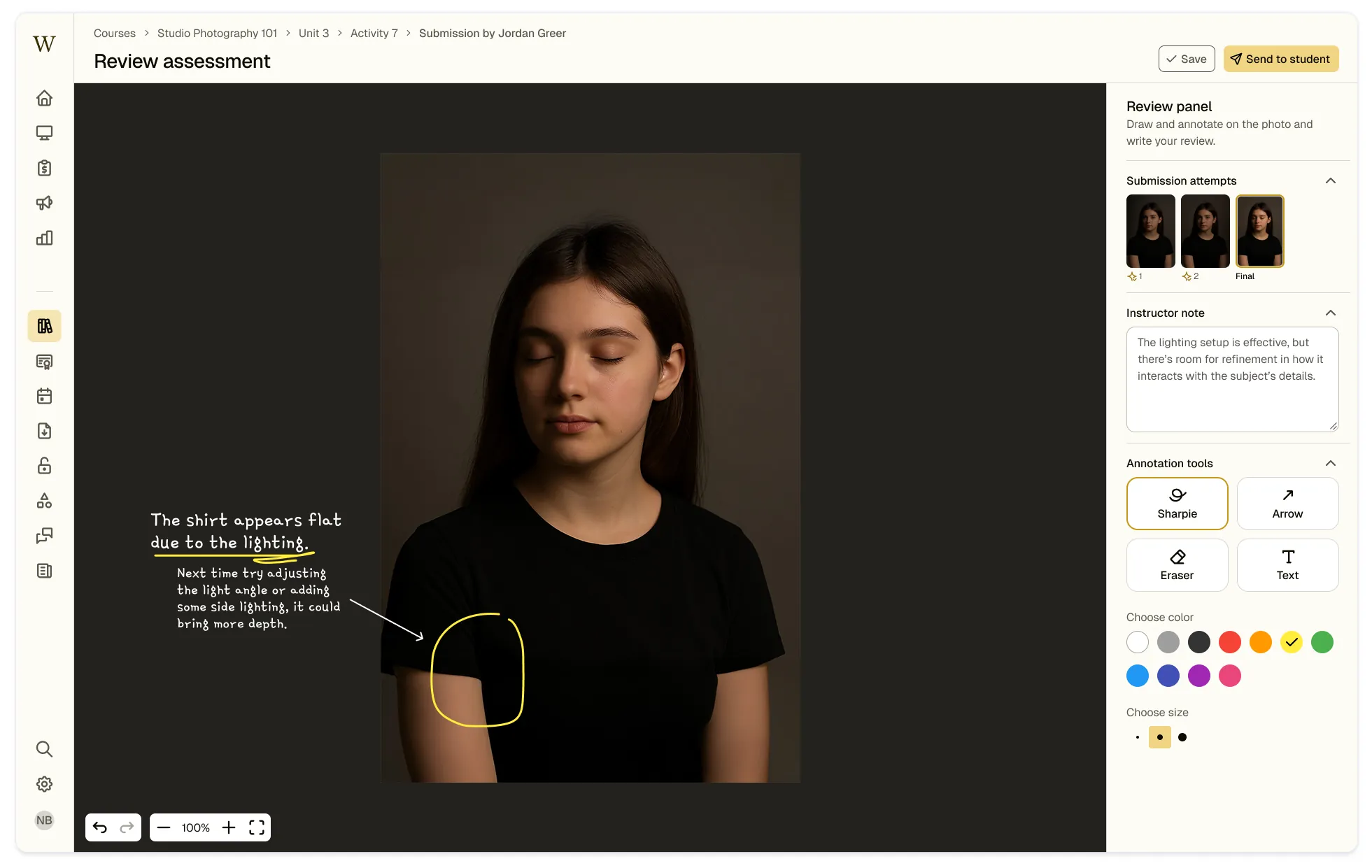

The instructor then evaluates the final submission, giving feedback on creative choices with annotation tools. Key areas can be highlighted to help students improve, and previous attempts remain visible so the instructor can assess each student's learning progress.

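Splitting the work this way aims for a balanced, efficient assessment system: the AI handles routine technical checks, and instructors spend their time on creative feedback, which enhances online learning without adding to educators' workload.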

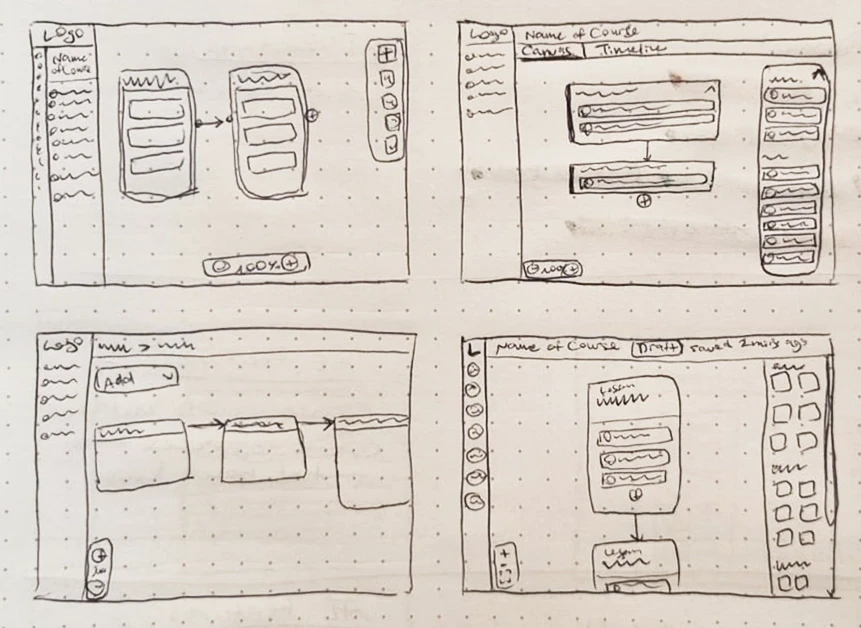

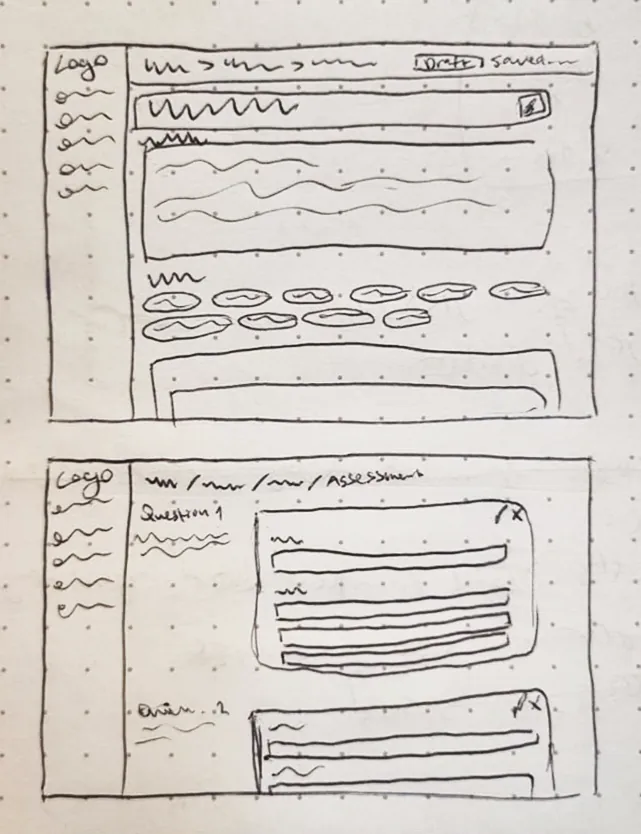

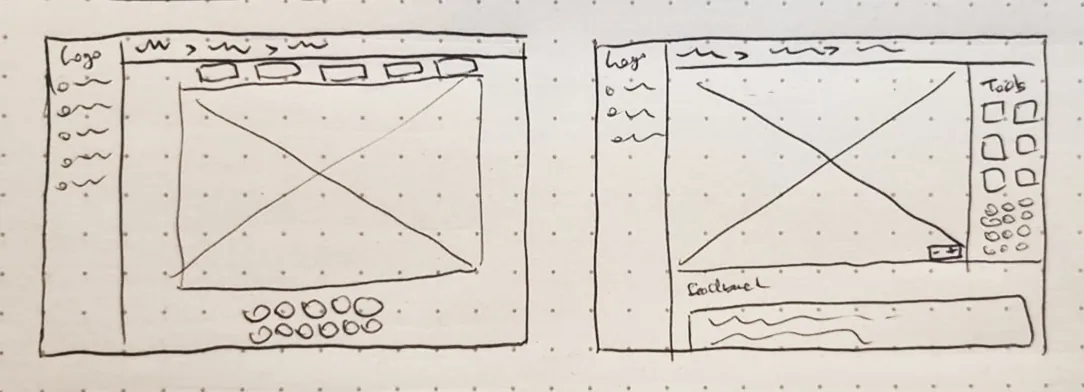

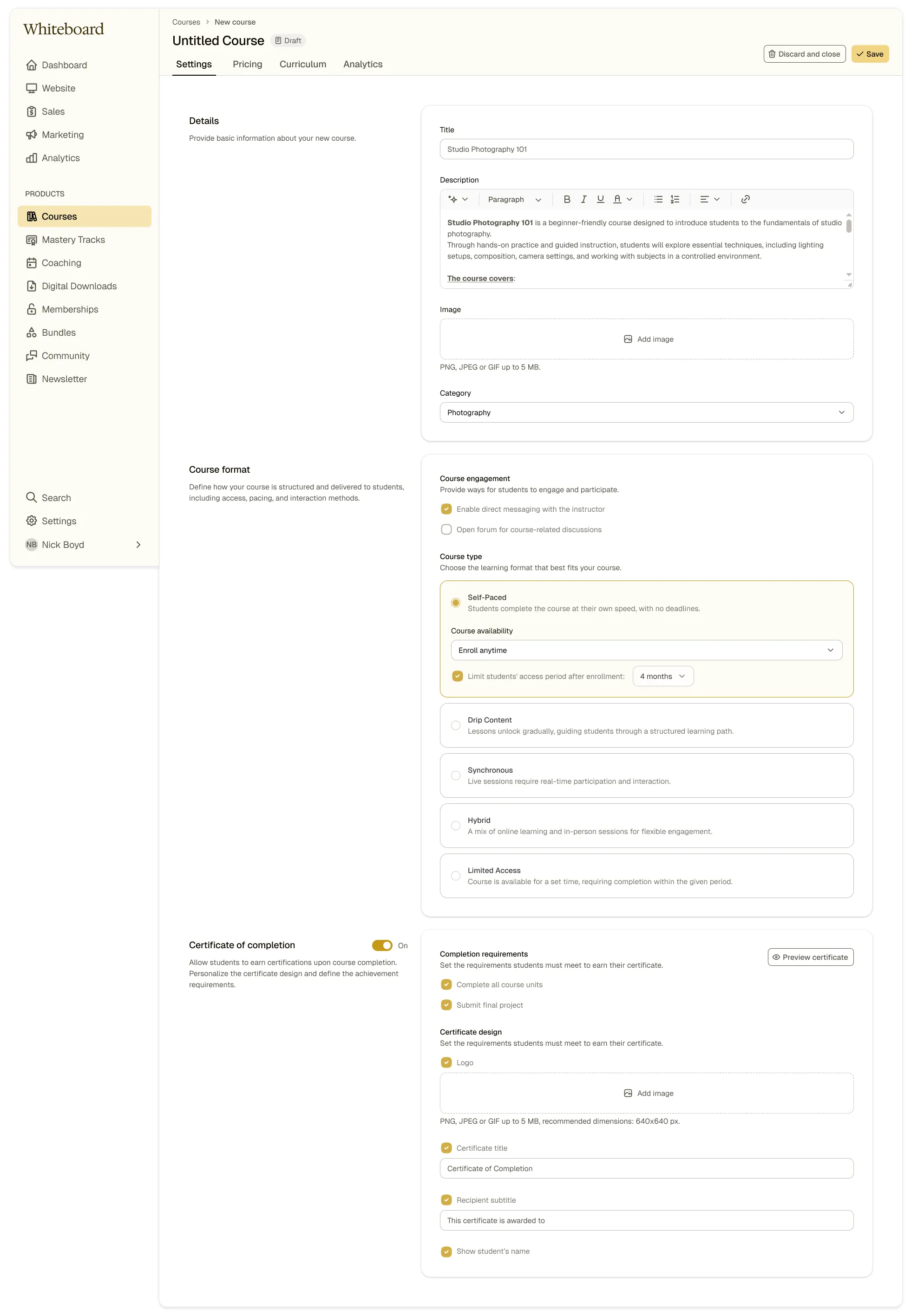

Sketches & High Fidelity Screens

Design iterations towards high-fidelity

Course > Settings

Course > Curriculum

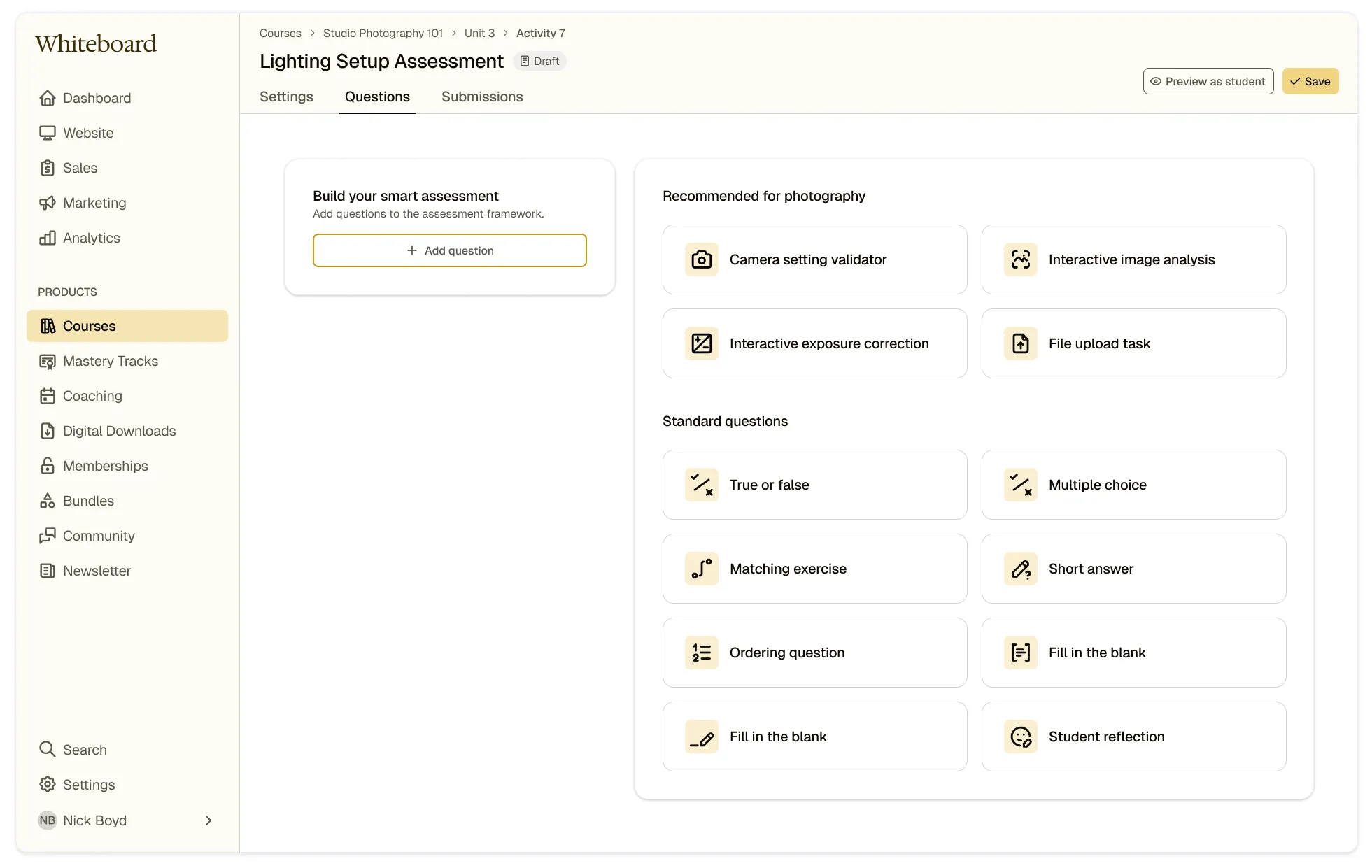

Smart Assessment > Questions (empty state)

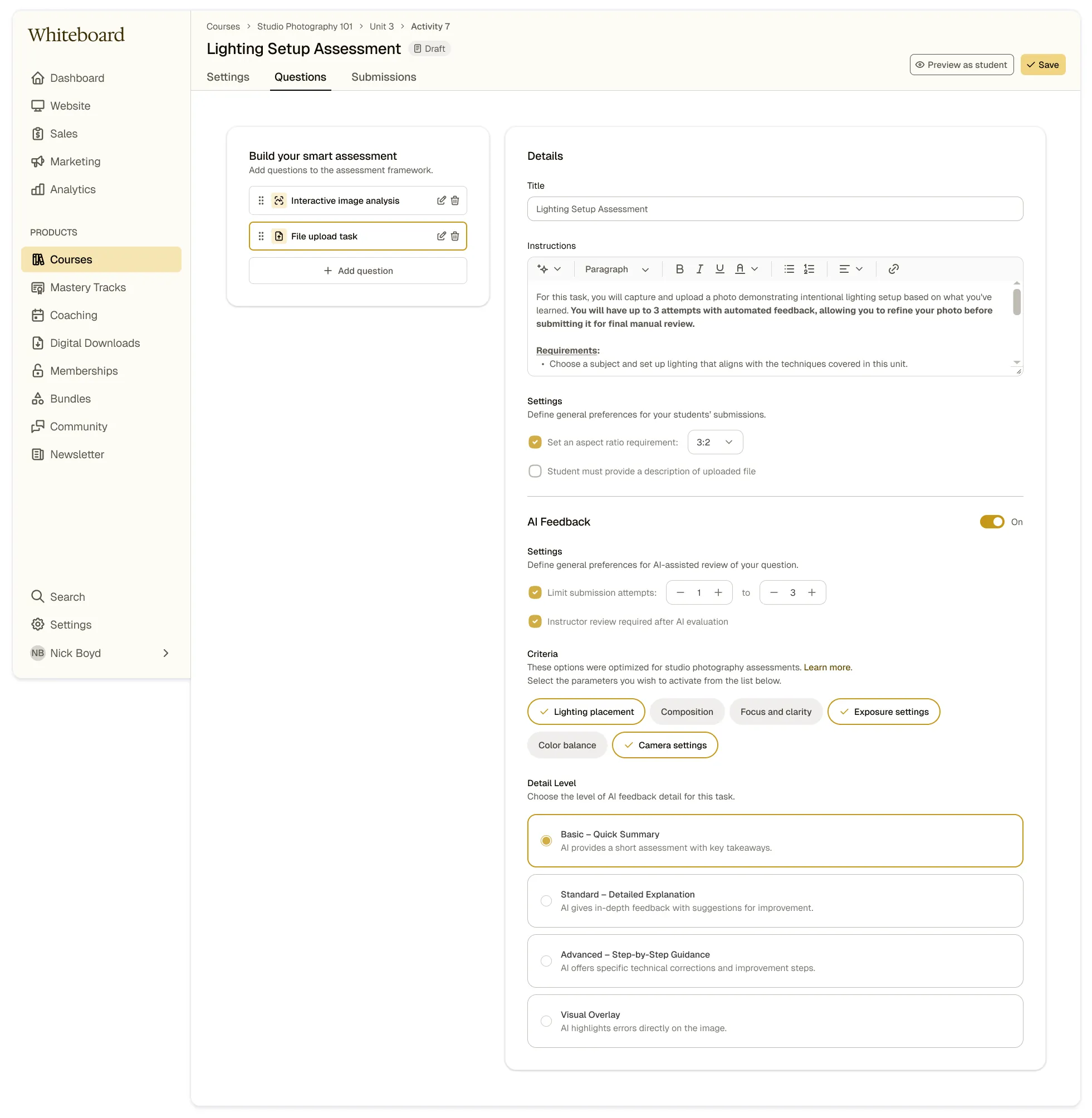

Smart Assessment > Questions

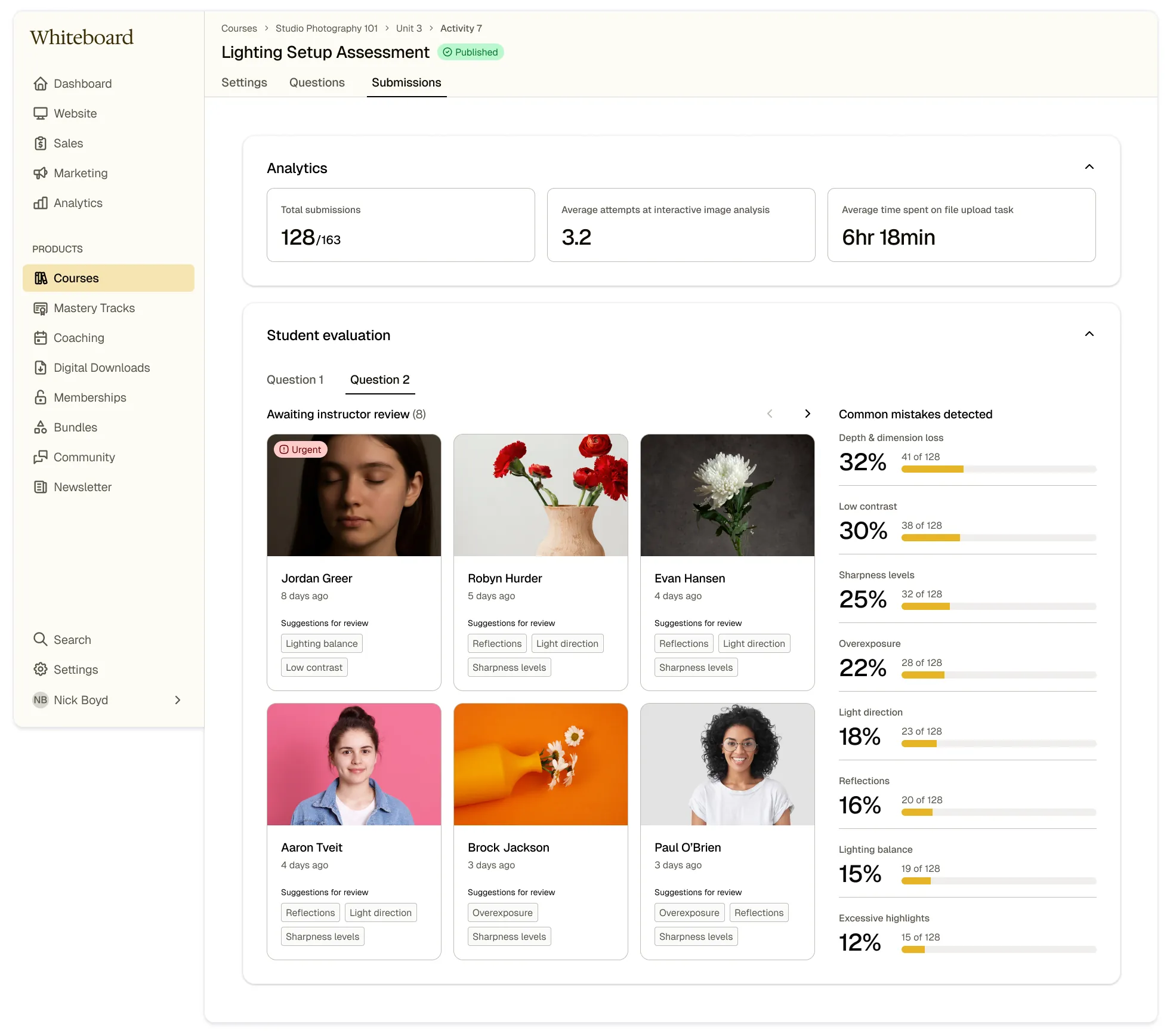

Smart Assessment > Submissions

Smart Assessment > Review

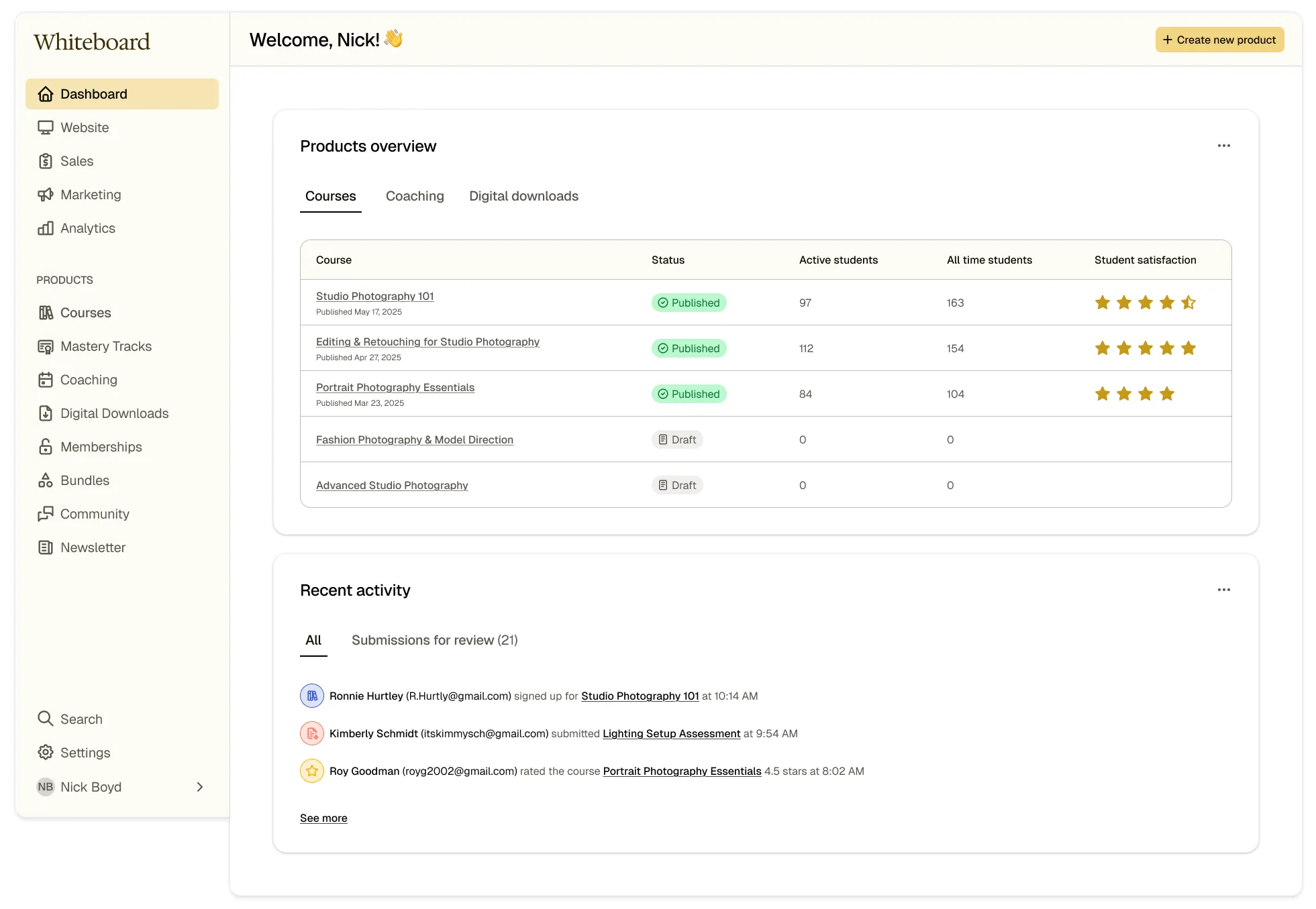

Main Dashboard

KPIs

How I Would Measure the Effectiveness of Smart Assessments

Measuring the effectiveness of the AI assessment framework would require tracking several key performance indicators.

Next Steps

My takeaways & learnings

This case study focused on the photography discipline and its unique challenges in online teaching. With further development, this approach to remote student assessment could extend to additional fields that require innovative solutions.

Some of the assessment tools I introduced may be applicable to other disciplines, and existing AI capabilities could be further explored. As AI continues to evolve, more industries may benefit from similar advancements in assessment and learning.

Thanks for scrolling 🙌
